Historical Roots of Phishing

Phishing began in the 1990s with spoofed emails that tricked users into giving up login credentials. Early cybercriminals targeted AOL customers with fake support notices, harvesting account passwords and payment data. This evolved into similar scams impersonating PayPal and eBay, warning users of bogus account issues. By the 2010s, criminals launched highly targeted “Business Email Compromise” (BEC) attacks: they harvested personal details (often from social media) to craft emails appearing to come from executives or colleagues. These tailored BEC scams proved very effective; the FBI notes that phishers frequently include accurate victim information (from blogs, social networks, etc.) to boost legitimacy. In short, each advance in technology (from AOL accounts to corporate email) has been quickly exploited by phishers to make their frauds more convincing.

Phishing in the Blockchain Era

Blockchain introduced irreversible payments, transparent ledgers, and a culture of open online communities, which together make perfect terrain for new lures:

- ICO & Token-Sale Clones. During the 2017 boom, scammers hijacked Slack or Telegram channels to post “official” contribution addresses. A single Slack compromise of the Enigma project netted ≈1,500 ETH.

- Token Drainers. Malicious dApps trick users into signing blanket approve() transactions, then empty wallets moments later. Drainers surged in 2022–24, costing users hundreds of millions as open-source kits spread on Telegram.

- Rug-Pull Interfaces. Copy-paste DeFi contracts launched with flashy front-ends promise high APY, attract deposits, and vanish once TVL peaks. Flash-loan volume inflation and paid influencer tweets make these schemes appear legitimate until the final drain.

- Domain & ENS Spoofing. Look-alike .eth names or Unicode-padded domains funnel users to counterfeit wallet-upgrade portals.

- DAO & Governance Phish. Delegates and multisig signers receive tailored spear-phishing links that request “urgent vote-signing”; instead of casting a vote, the victims sign malicious calldata.
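The look-alike names mentioned in the list above typically depend on characters that render like ASCII but are not. As a rough illustration, the Python sketch below lists every non-ASCII code point in a name. The function name and the sample names are hypothetical, and the check is only a heuristic: legitimate names can contain non-ASCII characters too, so a hit means "inspect more closely", not "malicious".

```python
import unicodedata


def non_ascii_chars(name: str) -> list:
    """Return (code point, Unicode name) for every non-ASCII character in name."""
    findings = []
    for ch in name:
        if ord(ch) > 127:
            # unicodedata.name() takes a default for unnamed code points
            findings.append((f"U+{ord(ch):04X}", unicodedata.name(ch, "UNKNOWN")))
    return findings


# Hypothetical names: the second swaps Latin "a" for Cyrillic "а" (U+0430),
# the third hides a zero-width space (U+200B) before the dot.
for candidate in ["mywallet.eth", "myw\u0430llet.eth", "mywallet\u200b.eth"]:
    print(repr(candidate), non_ascii_chars(candidate))
```

On screen, all three sample names can render identically, which is what makes this kind of spoofing effective.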

Unlike traditional phishing attacks focused on obtaining passwords, blockchain phishing targets off-chain generated transaction signatures, which is why protocols should pair user education with a smart contract audit. Once submitted to the blockchain, these malicious transactions are irreversible, making timely detection and prevention critical.
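To make the blanket approve() pattern concrete, here is a minimal Python sketch that decodes the calldata of an ERC-20 approve(address,uint256) call and flags an unlimited allowance. The function names and the sample spender address are hypothetical. Treating only the maximum uint256 value as "unlimited" is an assumption of this sketch; a drainer could just as well request any amount larger than the victim's balance.

```python
# First 4 bytes of keccak256("approve(address,uint256)")
APPROVE_SELECTOR = bytes.fromhex("095ea7b3")
MAX_UINT256 = 2**256 - 1


def decode_approve(calldata_hex: str):
    """Return (spender, amount) for ERC-20 approve() calldata, else None."""
    if calldata_hex.startswith("0x"):
        calldata_hex = calldata_hex[2:]
    data = bytes.fromhex(calldata_hex)
    # selector (4 bytes) + padded address (32 bytes) + amount (32 bytes)
    if len(data) != 68 or data[:4] != APPROVE_SELECTOR:
        return None
    spender = "0x" + data[16:36].hex()  # last 20 bytes of the first word
    amount = int.from_bytes(data[36:68], "big")
    return spender, amount


def is_unlimited_approval(calldata_hex: str) -> bool:
    """True if the calldata grants the spender the maximum possible allowance."""
    decoded = decode_approve(calldata_hex)
    return decoded is not None and decoded[1] == MAX_UINT256


# Hypothetical calldata: spender 0x1111...1111, amount = 2**256 - 1
sample = "0x095ea7b3" + "00" * 12 + "11" * 20 + "ff" * 32
print(decode_approve(sample)[0])      # the spender the user would be trusting
print(is_unlimited_approval(sample))  # True
```

A wallet prompt for this transaction may show little more than a contract interaction, yet the decoded fields reveal that the spender would be entitled to move the user's entire token balance.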

Web3 Threat Surface

Web3 introduces new attack surfaces for phishers, beyond the traditional email and websites of Web2. Here we break down a few technical vectors specific to blockchain and crypto ecosystems that scammers exploit:

- Exchange data-breach impersonation (Coinbase 2025): Attackers acquired extensive personal customer data from Coinbase, partially through bribing overseas support agents. Using details such as names, balances, partial Social Security numbers, and transaction histories, scammers executed sophisticated email and voice phishing (vishing) campaigns. Victims received highly personalized messages and follow-up calls impersonating Coinbase support, convincingly referencing private financial information. Victims were then guided into transferring assets to purportedly "secure" self-custody wallets, which scammers immediately drained. Losses exceeded $46 million in March 2025 alone, including one victim who lost 400 BTC. Coinbase publicly disclosed the breach in May 2025 and offered a $20 million bounty for information leading to arrests.

- Address Poisoning: Address poisoning is a phishing-adjacent tactic that manipulates how users typically interact with wallet addresses. Rather than seeking credentials or approvals, attackers rely on UI familiarity and human error. The scam involves sending a 0-value transaction from a spoofed address that visually mimics one the user has recently interacted with. Since most wallet UIs truncate addresses (e.g., showing only the first and last few characters), the spoofed address can look identical to the genuine one. Victims typically fall for it when they copy addresses from their transaction history or from block explorers like Etherscan, which is a deeply unsafe practice: poisoned addresses appear in the transaction history and can be mistaken for previously used or trusted recipients.

- Scam Tokens: Scam tokens are unsolicited assets that appear in a user’s wallet, often with names or metadata encouraging interaction. These tokens may include embedded phishing URLs, and interacting with them (such as approving them for trade or viewing them in certain dApps) can trigger malicious behavior. Some tokens even imitate known projects to build credibility and encourage clicks.

- Honeypot tokens: Honeypot tokens can be purchased normally on decentralized exchanges, but block or severely penalize selling. These tokens may include logic that restricts transferFrom() unless the sender is the token deployer or contract owner, or apply extreme sell taxes that leave users with nothing. This gives victims the illusion of holding a growing token balance with rising value, until they attempt to exit and realize it’s impossible. Some honeypots even include fake volume, bots, or chart manipulation to appear legitimate.

- Rugpulls: Rugpulls are exit scams where project creators promote a token or platform, attract liquidity and users, and then remove all value or disable utility. Attackers often build trust with short-term legitimacy: deploying audited-looking contracts, paying influencers, and offering high APY to bait deposits. Once total value locked (TVL) reaches a peak, the exit is executed.

- Pig Butchering Scams: These long-con investment scams have existed for years, well before Web3, and remain among the most profitable scam types. Attackers build extended emotional or trust-based relationships with victims through social media or dating platforms, eventually guiding them into fraudulent crypto investment schemes. In the AI era, generative tools significantly enhance this scam vector by automating personas, crafting fluent multilingual dialogue, and scaling outreach efforts.

- Wallet protocols and apps: Attackers clone or abuse wallet interfaces (like WalletConnect, MetaMask) to steal keys or approvals. For example, in 2024 researchers found a malicious crypto wallet app on Google Play that mimicked WalletConnect. By spoofing the trusted WalletConnect name and even using fake positive reviews, the app was downloaded ~10,000 times and drained ~$70,000 from about 150 users.

- Social network impersonation: Crypto communities converge on platforms like Twitter, Discord, Telegram, and specialized forums. Phishers exploit this social graph by posing as project teams or influencers on these channels. They might create fake official-looking announcements or direct-message links to scam sites. Because many crypto profiles are public, attackers can tailor messages using on-chain and social info. (For instance, scammers often pay bots to like, share, or reply to create an aura of legitimacy.) Fake airdrops are frequently distributed through these impersonation efforts: scammers copy trusted project branding to advertise giveaway tokens and urge users to 'claim' them via phishing websites. These fake claims often mimic real token airdrops, tricking users into signing malicious transactions.

- Blockchain naming services (ENS/SNS): Decentralized domain systems (like Ethereum Name Service, “.eth” addresses, or Solana Name Service) give attackers new hooks. Scammers have sent phishing emails to ENS name owners claiming their domains were expiring. Victims who clicked were steered to counterfeit renewal sites that stole funds. In general, any Web3 username or DNS record can be spoofed or weaponized in a phishing lure.

- DAO governance: Decentralized Autonomous Organizations rely on token-holder voting, but voting itself can be attacked. Phishers may seed malicious proposals or target key delegates. In mid-2024, a MakerDAO governance delegate was tricked into signing multiple phishing transactions; as a result, he lost about $11 million in assorted tokens. This case highlights that even critical Web3 insiders (DAO delegates, contract administrators, etc.) are at risk of cleverly social-engineered prompts.

Each of these vectors is inherently tied to blockchain: they either exploit on-chain mechanisms (smart-contract approvals, name services) or the crypto ecosystem’s social infrastructure (Discord servers, Telegram chats, wallet GUIs).
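Address poisoning, described in the list above, is easy to see in code. The following is a minimal Python sketch, assuming a wallet UI that shows the first six and last four characters of an address. Both function names and both sample addresses are hypothetical, and real wallets differ in how many characters they display.

```python
def truncate(address: str, head: int = 6, tail: int = 4) -> str:
    """Shorten an address the way many wallet UIs display it."""
    return f"{address[:head]}...{address[-tail:]}"


def looks_poisoned(candidate: str, known_addresses, head: int = 6, tail: int = 4) -> bool:
    """True if candidate differs from a known address but truncates identically."""
    cand = candidate.lower()
    for known in known_addresses:
        k = known.lower()
        if cand != k and truncate(cand, head, tail) == truncate(k, head, tail):
            return True
    return False


# Hypothetical addresses: same first and last characters, different middle.
trusted = "0xa1b2" + "0" * 32 + "c3d4"
spoofed = "0xa1b2" + "f" * 32 + "c3d4"

print(truncate(trusted))                   # 0xa1b2...c3d4
print(truncate(spoofed))                   # 0xa1b2...c3d4
print(looks_poisoned(spoofed, [trusted]))  # True
```

The two addresses are indistinguishable once truncated, which is why comparing the full address, character by character, is the only reliable check before sending funds.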

LLM Architecture Primer

Large Language Models (LLMs), such as those behind ChatGPT and Anthropic's Claude, are AI systems trained on massive datasets to generate human-like text. They excel at producing coherent, contextually accurate content from simple prompts. For phishers, this capability means they can effortlessly generate highly realistic and persuasive communications (emails, chat responses, or website text) in virtually any language. A short prompt specifying a particular tone or brand identity quickly yields content that is hard to distinguish from legitimate communications, significantly boosting scammers’ effectiveness at scale.

The Rise of AI-Enhanced Phishing

Generative AI significantly enhances traditional blockchain scams by automating and personalizing social engineering:

- Pig Butchering Scams: With the rise of generative AI, executing these scams at scale has become easier and more convincing. Attackers now automate personas, craft fluent multilingual dialogue, and use AI chatbots to maintain long-term emotional manipulation across platforms, dramatically increasing the reach and efficiency of these frauds.

- AI-Generated Investment Promotions: Deepfake-generated videos or AI-crafted impersonations convincingly depict crypto project founders or influencers announcing fake token investment opportunities, misleading users into investing in fraudulent schemes.

- Automated, Personalized Scams: AI chatbots deployed on Telegram and Discord channels impersonate legitimate support representatives, guiding users through complex phishing scenarios around the clock without human intervention.

- Mass-Generated Phishing Domains: Attackers use LLM-generated text to rapidly deploy numerous convincingly customized phishing websites targeting diverse user groups across multiple languages and regions.

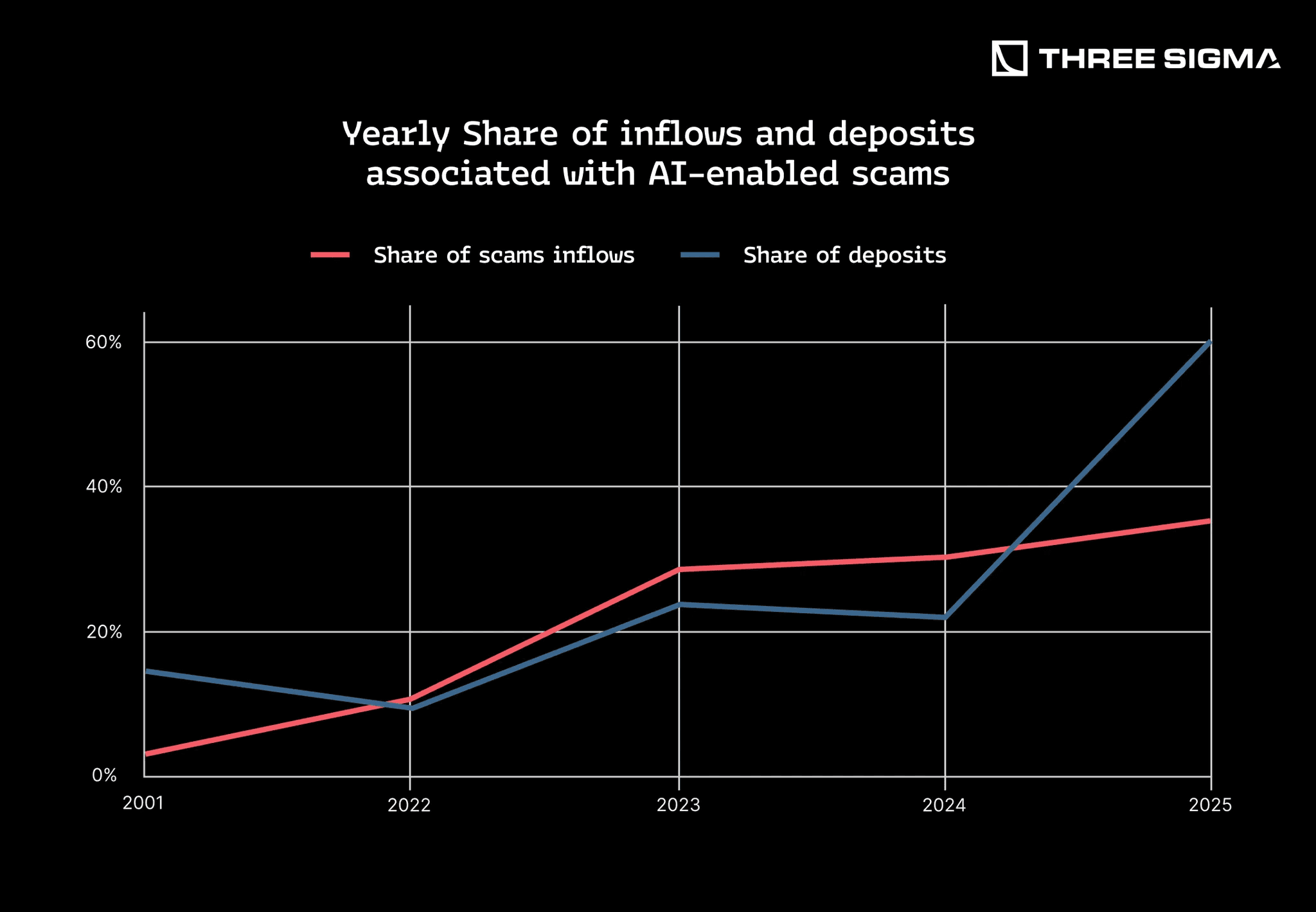

Industry data confirms a steep rise in AI-enhanced scams post-2023, with AI-related crypto phishing incidents and losses significantly outpacing traditional scams. Attackers leverage generative AI’s scalability to produce sophisticated, personalized content rapidly, vastly increasing their operational efficiency and profitability.

Real-World Impact of AI-Driven Crypto Scams

Real-world data confirms generative AI is dramatically boosting crypto scams, with blockchain forensics showing successful incidents nearly tripling (a ~200% increase) from 2024 to 2025 after the widespread adoption of AI tools. By 2025, the majority of funds entering scam addresses were connected to AI-powered schemes, and total crypto scam revenue surpassed $12 billion annually, largely driven by AI-enhanced phishing and sophisticated “pig butchering” (long-con investment scams). Darknet marketplaces selling AI-generated phishing kits, voice-cloning tools, and deepfake services reported revenue growth exceeding 1,900% since 2021, highlighting an escalating arms race among cybercriminals.

In practice, AI now powers numerous attack vectors. Phishing emails and websites use AI-generated content indistinguishable from legitimate communications. Social media deepfake videos of crypto influencers and exchange executives convincingly promote fake token giveaways or airdrops. Voice-cloning enables realistic impersonation of trusted contacts or support representatives in phone-based scams. Additionally, AI-driven multilingual chatbots infiltrate Telegram and Discord, mimicking official support and deceiving users into revealing sensitive wallet credentials. Overall, generative AI significantly enhances scam realism, making detection increasingly difficult and enabling scams to operate at unprecedented scale.

Conclusion

In conclusion, the evolution from 1990s email scams to today’s AI-powered Web3 fraud has been a story of technology leveling the playing field for attackers. Phishers have continuously adapted: embracing new communication channels (from email to Slack to Discord), exploiting human psychology (greed, fear, trust in authority), and now harnessing artificial intelligence to sharpen their deceit. Web3, with its complex user experience and irreversible transactions, presents a high-stakes arena for these scams. This first part of our “AI Phishing Frenzy” series has laid out the foundations: the historical context, the mechanics of LLM-driven phishing, the unique attack surfaces of crypto, and real examples of the threat in action. With the problem scope established, the stage is set for Part 2, where we will delve into defensive strategies and cutting-edge countermeasures to protect the Web3 ecosystem from this GenAI-powered scam wave. Stay tuned: the fight against phishing is entering a new era, and understanding the enemy is the first step in mounting an effective defense.

Security Researcher

Simeon is a blockchain security researcher with experience auditing smart contracts and evaluating complex protocol designs. He applies systematic research, precise vulnerability analysis, and deep domain knowledge to ensure robust and reliable codebases. His expertise in EVM ecosystems enhances the team’s technical capabilities.