Introduction

This blogpost is the third and final in our ongoing series, Arithmetization in STARKs, which is a high-level exploration of our paper "Study of Arithmetization Methods for STARKs".

This report compares the low-degree bounds of both methods described previously, AIR and PAIR, specifically with proximity testing in mind. It briefly discusses the computational complexity of each and how to transition between them, while also exploring a different way of employing the selector column to improve some aspects.

If you missed the first two articles of this series, which provide an introduction to AIR and to Preprocessed AIR, you can read them respectively.

Importance of Comparing Degree Bounds

In the world of cryptographic protocols, ensuring the soundness of low-degree proximity testing is crucial. To enhance this soundness, it is vital to reduce the degree bounds of certain polynomials. In this blog post, we will start by comparing the degree bounds of the Composition Polynomial, , of the Algebraic Intermediate Representation (AIR) and the Combined Constraint Polynomial, , of the Preprocessed AIR (PAIR).

The Specific Case of FRI

Let us focus on the Fast Reed-Solomon IOPP (FRI) protocol, which is commonly used in STARKs. The FRI commit phase accumulates the error of the degree-respecting projection rounds across all layers. Particularly, increasing the low-degree bound increases the FRI soundness error (something we want to minimize), for fixed batched FRI proximity parameter and rate parameter.

For more details, see the "Proximity Gaps" paper or Ulrich Haböck’s paper on FRI.

AIR versus PAIR

Given the aforementioned details, it becomes evident that comparing the degree bounds of and holds immense importance in determining the optimal approach for optimized Reed-Solomon proximity testing in STARKs. To ensure a fair comparison, the constraints used in both cases are identical and indexed by the set .

The AIR Composition Polynomial

Recall the formulation of the composition polynomial utilized in AIR as

which yields the following degree bound:

where is the trace domain and, regarding constraint , is the enforcement domain and is the AIR multivariate polynomial that expresses the operations in the constraint.

The PAIR Combined Constraint Polynomial

For the PAIR method, there is the combined constraint formulation:

that has a tight degree bound:

where is the PAIR selector polynomial, and is the enforcement domain of the constraints indexed by the set .

The mathematical intricacies of comparing these two expressions are quite technical, and we won’t bore you with them here, but if you’re interested, you can find them in our paper. In it, we have shown that the degree bound for PAIR will never be smaller than the degree bound for AIR, i.e.

In fact, in most testable cases, PAIR will result in noticeably larger degree bounds, which is bad news for single-round FRI soundness → it makes it necessary to execute more query rounds, leading to a larger proof. Therefore, in terms of optimizing low-degree proximity testing, it is not advantageous to construct a selector function to merge all constraints into a single one, rather than using separate AIR constraints with corresponding enforcement domains.

Decomposing the Selector to Optimize PAIR

By observing the formulation of , you’ll notice that this bound increases linearly with the cardinality of the image of , that is, . Let’s take a look at how we can decrease this bound through manipulation of this parameter.

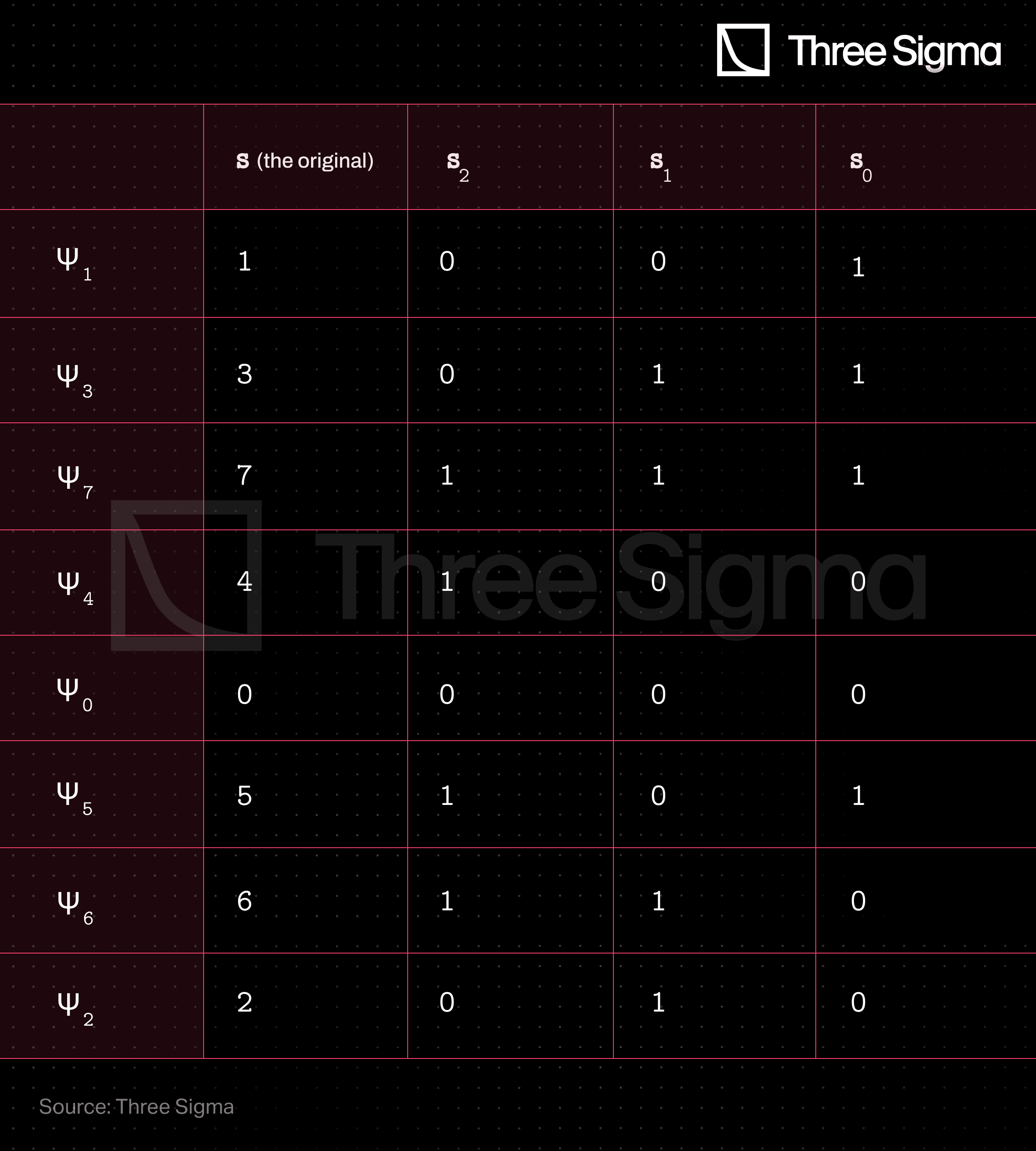

Suppose we’re dealing with 8 disjoint constraints, i.e. , without loss of generality, let us assume that . We could, as before, define a single selector column that runs through the values of , but what if instead we decomposed the selector into a binary representation with bits?

Expressly, the selector columns will flag the constraints as such:

In this adapted version of PAIR, the new combined constraint could be written as:

The difference between the degree bounds of and would be

which predicts an improvement in the degree bound whenever the average degree of the decomposed selectors, , is less than times the degree of the regular selector. While this is not an obvious enhancement of the bound, it does create a sizeable margin for optimization.

The base that you choose to decompose the selector column (in the above example ) has different impacts on the proof system that you’re building, specifically: the smaller it is, the more extra columns you will require to uniquely represent the original value - and over which you must perform the low degree extension as well - although the margin for optimization of the degree bound will be greater. One must consider these opposing effects when pondering the base.

You can look into the details of the base’s influence in our paper.

Computational Complexity

We'll now take a quick look at the computational requirements of PAIR and AIR, particularly in relation to the low-degree extension (LDE).

The LDE of the trace function , AIR composition polynomial and PAIR combined constraint polynomial , is the prover-executed evaluation of said functions over the evaluation domain. Simplifying, the evaluation domain is a large set disjoint from the smaller trace domain (check our paper for more details 😉).

The LDE is a relevant performance assessment point because it is the dominant function evaluation step in the arithmetization phase, prior to RPT, and occurs in the entirety of the evaluation domain yielding a significant computational effort.

Computational Disadvantages of LDE in PAIR

The primary drawback of the LDE in PAIR stems from the interpolation and evaluation of the additional selector polynomials. These operations impose computational overhead. Additionally, if the decomposition method is employed, it is crucial to consider the system requirements for supporting an extended trace table created by appending multiple selector columns. Furthermore, PAIR also requires evaluating the vanishing polynomials for all constraints and selector columns. However, it's important to note that AIR without selectors bypasses these issues altogether.

Drawback of the AIR Method

Conversely, the AIR method's main disadvantage lies in the necessity of computing the vanishing polynomial for each individual constraint. In contrast, PAIR combines all constraints into a single larger constraint, requiring the computation of only one vanishing polynomial. Notably, evaluating a vanishing polynomial becomes easier when its set of roots closely aligns with a multiplicative group [Medium post by StarkWare]. For instance,

which avoids the need for explicitly computing a lengthy product.

Considering Application Context and Performance Evaluation

To fully comprehend the impact of these subtle computational differences on actual performance, it is crucial to examine the specific application context of each project. Several factors come into play, including:

- Task allocation between the prover and the verifier, which involves defining criteria to strike a balance.

- Evaluating the computational requirements concerning processing power, memory, and network needs, with thorough benchmark testing.

- Conducting real-world practical testing to assess performance in a real-world environment.

Just a nice calming forest landscape, before we proceed to the final 30m of the 100m sprint.

Hybrid Approach

We have shown that the degree bound for the RPT after an AIR will always be less than or equal to the degree bound of the corresponding (regular) PAIR. However, it might be possible to enjoy some of the advantages of PAIR without deteriorating the degree bound. Specifically, a hybrid strategy would avoid this issue, if there exists a proper subset of constraints which combined using the PAIR approach leads to a degree bound which is at most the degree bound of the AIR’s composition polynomial. In that case, the PAIR procedure could be used without affecting the final low-degree bound.

To sum things up, we could build a composition polynomial using the PAIR combined constraint for better performance - mostly, by reducing the amount of vanishing polynomials in the final RPT assignment - while still optimizing low-degree proximity testing by benefiting of the same low-degree bound as in the corresponding AIR.

Transitioning back from PAIR to AIR

Let’s take a look at how we could easily convert a PAIR implementation into a corresponding AIR, with each constraint individually defined alongside its enforcement domain, rather than the unique combined PAIR constraint polynomial, .

The main components in AIR that are not present in PAIR are the vanishing polynomials of the enforcement domains. So how do we get them? Similar to the PAIR blogpost, when starting the PAIR approach, the selector values need to be publicly defined along the execution trace. These values determine the constraint being enforced at each execution trace row. To begin the transition process, these selector values are used to generate the enforcement domains as level-sets of .

Once this is done, the procedure is similar to the one in the AIR blogpost and can be summarized as:

- Apply the APR on the individual constraint polynomials using the newly defined 's.

- Perform the ALI reduction, using public randomness provided by the verifier, to build the composition polynomial.

Overall, transitioning from PAIR to AIR is simpler than the reverse, with the only major addition being an optional interaction step.

Wrapping Up

Having completed this series of blogposts, you are now more better capable at making design choices when constructing your own STARKs. Or, if you are only curious about these concepts, we hope you enjoyed yourself!

Researcher

João holds a Master's degree in aerospace engineering from Instituto Superior Técnico. He is always eager to learn and is particularly interested in the analytical aspects of various subjects. João joined the research department at Three Sigma as he considers it to be an interesting challenge that will require him to diversify his skill set. In his free time, he enjoys mountain biking and playing tennis.

Researcher

Tiago has a Bachelor's and Master's degree in aerospace engineering from Instituto Superior Técnico and profound experience in nonlinear structural dynamics from Technical University of Munich. His interests in mathematics, cryptography, and computational algorithms make him well-suited for research in algorithms for cryptographic proofs. He focuses on understanding, improving, and building Zero-Knowledge primitives such as STARKs and SNARKs, in Three Sigma’s research department, offering valuable expertise and unique perspectives on the future of crypto technology.