Introduction

Upgradeability introduces a host of pitfalls that immutable contracts do not face. In this part, we examine the most common vulnerabilities and failure modes in upgradeable smart contracts. Each section covers the nature of the vulnerability, why it arises in upgradeable settings, and real-world hacks or incidents that exploited it, most of them from 2021–2025. By understanding these, you’ll be better prepared to avoid them in your own contracts.

Before implementing UUPS or Transparent proxies, independent Solidity audits help catch these pitfalls early. Teams building on Sui or Aptos should also consider a Move audit, and teams building on Solana or writing smart contracts in Rust can consider a Rust audit. Our blockchain security services help teams close these gaps fast.

Uninitialized Proxy / Implementation

The Vulnerability

An uninitialized contract is one whose initializer has never been executed. In the proxy pattern, the proxy typically has no constructor logic for the implementation’s state, and the implementation’s constructor cannot set that state either: it runs once, when the implementation is deployed, against the implementation’s own storage rather than the proxy’s. Instead, the implementation usually provides an initialize() function that must be called through the proxy exactly once after deployment. If this step is forgotten or done incorrectly, the contract’s critical state (like owner roles, or important parameters) may still be in a default, unset state. Attackers can exploit this by calling the initializer themselves, taking control of the contract.

In a Transparent proxy, this usually means the proxy was deployed and pointed at an implementation, but nobody ever called the initialization function via the proxy. Because initialize() must be invoked through the proxy (so state is written into the proxy’s storage), a forgotten call leaves critical variables such as owner or guardian at their default zero values. An attacker can then call initialize() via the proxy, set themselves as owner, and use that role to seize full control, for example by upgrading the proxy to code of their choosing. The same risk applies to a UUPS proxy, where the new owner can immediately call upgradeTo().

UUPS adds a second danger: an uninitialized implementation contract. Calling initialize() directly on the implementation writes only to the implementation’s own storage, which the proxy never reads, so it does not by itself hand over the proxy’s state. However, many OpenZeppelin-based contracts have an initializer that sets an owner variable (for Ownable) and marks the contract as initialized. If an attacker can run that on the logic contract, they become owner of the implementation. In UUPS the upgrade function lives in the implementation, so that ownership can be a stepping stone to deeper exploits: the attacker can invoke the upgrade logic on the implementation itself and have it delegatecall into code that self-destructs, destroying the logic that the proxy depends on.
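The difference between initializing through the proxy and initializing the implementation directly can be sketched with a toy storage model. This is a minimal Python illustration, not EVM code: the Contract and VaultLogic classes, the call and delegatecall helpers, and the "deployer" and "attacker" labels are all hypothetical names introduced here.

```python
class Contract:
    """A deployed contract: its own storage plus optional code."""

    def __init__(self, code=None):
        self.storage = {}  # unset keys stand in for the EVM's zero default
        self.code = code


class VaultLogic:
    """Hypothetical implementation with a one-time initializer."""

    @staticmethod
    def initialize(storage, caller):
        if storage.get("initialized", False):
            raise RuntimeError("already initialized")
        storage["initialized"] = True
        storage["owner"] = caller


def call(target, fn_name, caller):
    """Regular call: the target's code runs against the target's own storage."""
    getattr(target.code, fn_name)(target.storage, caller)


def delegatecall(proxy, implementation, fn_name, caller):
    """Delegatecall: the implementation's code runs against the proxy's storage."""
    getattr(implementation.code, fn_name)(proxy.storage, caller)


implementation = Contract(code=VaultLogic)
proxy = Contract()

# Mistake: the deployer initializes the implementation directly.
call(implementation, "initialize", "deployer")
owner_seen_by_proxy = proxy.storage.get("owner")  # still unset

# The proxy's storage was never written, so the first caller through the proxy wins.
delegatecall(proxy, implementation, "initialize", "attacker")
```

In this model the deployer ends up owning only the implementation’s storage, while the proxy’s owner slot stays empty until someone, here the attacker, initializes through the proxy.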

Real-World Example, Wormhole (2022)

Wormhole, a major cross-chain bridge, paid a $10 million bug bounty in 2022 after a white-hat hacker reported an uninitialized upgradeable contract in its system. The Wormhole Ethereum bridge used a UUPS-style proxy. After a routine update, the implementation contract ended up uninitialized: a bug in the upgrade script had effectively “reset” the initialization. This opened a critical hole. An attacker could call the initialize() function directly on the implementation contract and make themselves its guardian (admin). With that authority, they could call Wormhole’s routine upgrade entry point (submitContractUpgrade) on the implementation and point it at a malicious contract whose code simply executed a selfdestruct. Because submitContractUpgrade delegatecalled into that contract, SELFDESTRUCT would run in the implementation’s own execution context and delete the implementation. The proxy itself would remain on-chain, but it would point to an address with no code, instantly bricking the bridge.

Had a malicious hacker found this first, a huge amount of user funds could have been permanently frozen. Fortunately, it was a friendly hacker who alerted Wormhole, and they promptly fixed the issue (and paid the record bounty). The root cause was simply forgetting (or undoing) the initialization call on an upgrade, illustrating how one missed function call can jeopardize an entire protocol.

Another infamous case was the Parity Multisig Wallet bug (2017). Parity had a library contract (acting as shared logic) that was used by many multisig wallets, a design closely related to modern proxy patterns even though a shared library contract, not an explicit upgradeable proxy, provided the indirection layer. That library contract was deployed with an uninitialized owner. In November 2017, a user noticed this and called the init function, becoming the owner of the library contract, which allowed them to call a function that self-destructed the library. This killed the logic for all dependent wallets, permanently freezing over 500,000 ETH (no funds stolen, but irrecoverable). While that specific case wasn’t a proxy in the modern sense, the lesson carries: any upgradeable or reusable contract that isn’t initialized is a sitting duck. Attackers can gain privileges or break functionality.

Even beyond high-profile hacks, audits frequently catch uninitialized proxies in projects. It’s a very common oversight, especially for new developers who might deploy the proxy and forget to call the initializer, or who mistakenly call the implementation’s initialize() directly (instead of via the proxy), which doesn’t actually set the proxy’s state. Always ensure that initialization is done exactly once, and done right (via the proxy) immediately upon deployment. A thorough smart contract audit helps validate initializer patterns and proxy setup.

Re-initialization Bugs in Upgradable Smart Contracts

The Vulnerability

Closely related to missing initialization is the issue of re-initialization, when an initializer function can be invoked more than once (either by mistake or by malicious design). OpenZeppelin’s initializer modifier uses an internal state variable to prevent re-execution. However, complex inheritance or upgrades that introduce new initialization logic can lead to situations where that protection is bypassed or reset. If an attacker can somehow trigger an initializer (or a function meant to be called only once) a second time, they might be able to alter important state variables or seize control.

A common scenario is when upgrading to a new implementation that has its own initialize (or say initializeV2) to set up new variables. Developers might use OpenZeppelin’s reinitializer to allow this new init to run once. But if not carefully managed, it could reset something that lets the original init run again, or otherwise confuse the contract’s initialization status. Another scenario is where a bug in the upgrade inadvertently resets the internal “initialized” flag (for example, by reusing the Initializable storage incorrectly).
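A version-tracking guard of the kind described above can be modeled in a few lines. The sketch below is a simplified Python analogue, not OpenZeppelin’s code: VersionedInit, Staking, initialize_v2 and try_call are hypothetical names, and the guard stores only a single integer "highest version consumed".

```python
class AlreadyInitialized(Exception):
    pass


class VersionedInit:
    """Tracks the highest initializer version that has already run."""

    def __init__(self):
        self.version = 0  # 0 means "never initialized"

    def run(self, version, body):
        # Each version may run once, and never after a later version has run.
        if version <= self.version:
            raise AlreadyInitialized(f"version {version} already consumed")
        body()
        self.version = version


class Staking:
    """Hypothetical staking contract with a V1 and a V2 initializer."""

    def __init__(self):
        self.guard = VersionedInit()
        self.owner = None
        self.reward_rate = None

    def initialize(self, caller):
        self.guard.run(1, lambda: setattr(self, "owner", caller))

    def initialize_v2(self, rate):
        self.guard.run(2, lambda: setattr(self, "reward_rate", rate))


def try_call(fn, *args):
    """Return True if the call succeeded, False if the guard rejected it."""
    try:
        fn(*args)
        return True
    except AlreadyInitialized:
        return False


staking = Staking()
staking.initialize("deployer")
staking.initialize_v2(5)
replay_succeeded = try_call(staking.initialize, "attacker")  # guard rejects

# A buggy upgrade that resets the tracking variable reopens every initializer.
staking.guard.version = 0
takeover_succeeded = try_call(staking.initialize, "attacker")
```

The guard holds only as long as its tracking variable survives upgrades intact. Once that value is reset or overwritten, the original initializer becomes callable again.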

Real-World Example, AllianceBlock (2024)

In August 2024, AllianceBlock (a DeFi project) upgraded one of its staking contracts to add new token support. In the process, the developers made a critical mistake: the upgrade set the contract’s initialized boolean back to false (perhaps by deploying a new implementation without properly carrying over the flag). This meant the contract thought it was uninitialized again, so an attacker could call the initializer function a second time. By doing so, the attacker was able to reset key parameters of the staking contract. Specifically, they changed the rewardToken, stakingToken, and rewardRate to values of their choosing. They set an essentially infinite reward rate linked to a governance token, and potentially could have set the staking token to a dummy value. The effect: if executed fully, the attacker could deposit a tiny amount of the fake staking token and then withdraw an astronomically large amount of reward tokens. Even more insidiously, by changing the staking token address they could have locked all real staked funds (since the contract would think a different token was staked). In essence, re-initializing allowed the attacker to completely alter the contract’s core variables, undermining the protocol’s logic.

Thankfully, this exploit was caught early and prevented before funds were lost. AllianceBlock’s case underscores that re-initialization can be just as dangerous as not initializing at all. It can turn the contract logic on its head by overwriting state. Another nuance: some projects intentionally allow a “reinitializer” for new modules, but if the versions (tracking which init ran) aren’t managed correctly, an attacker might invoke an old init twice. The bottom line is initialization should happen once per intended version, and it should lock itself after use. Any upgrade that needs an init function must be handled carefully to avoid reopening this attack vector.

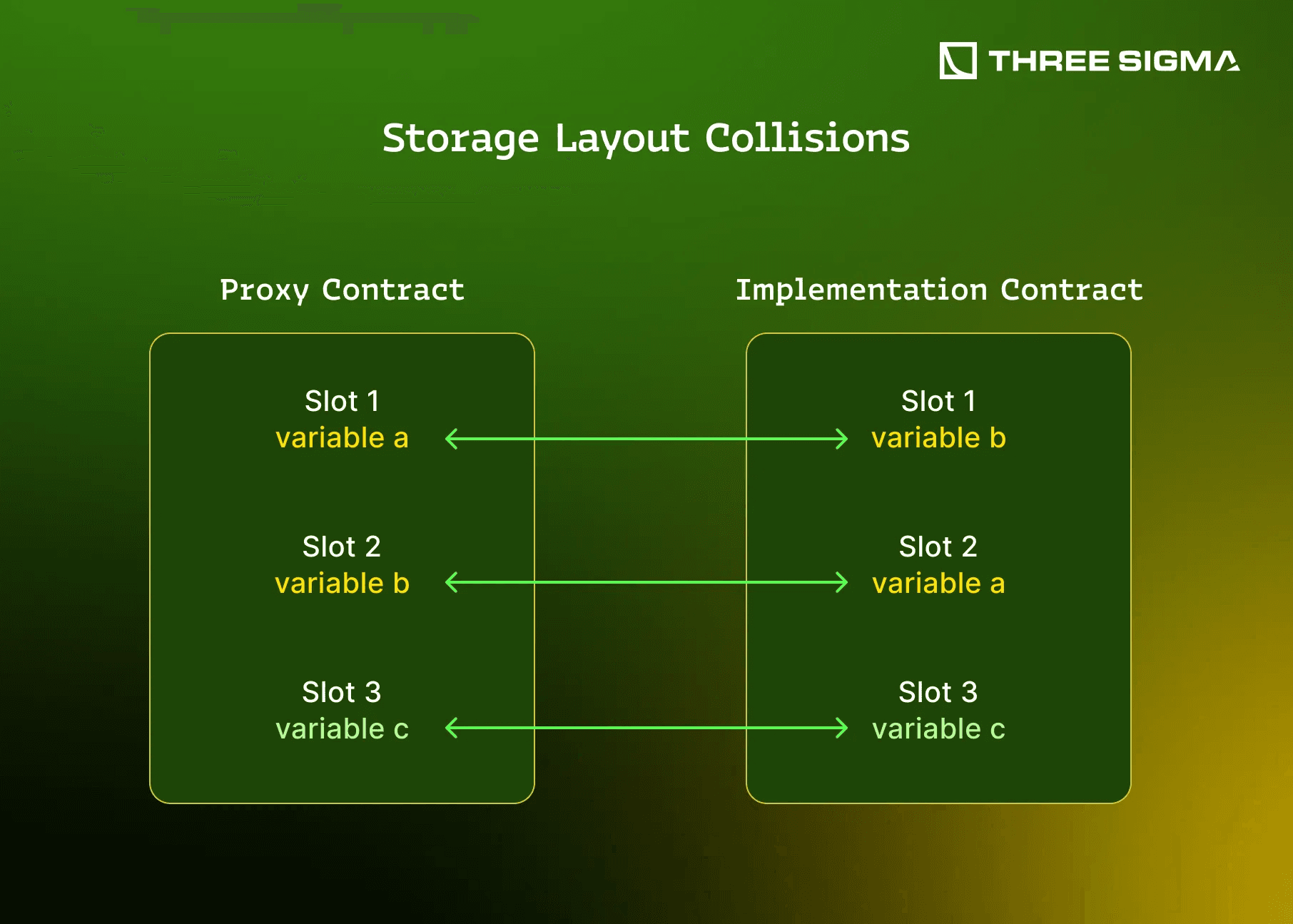

Storage Layout Collisions in Upgradable Contracts

The Vulnerability

One of the trickiest aspects of upgradeable smart contracts is maintaining a consistent storage layout between the old and new implementations, because a mismatch can lead to critical vulnerabilities. The proxy’s storage is what actually persists, and it must line up with the variables in the implementation. If the ordering or structure of state variables changes in an incompatible way, you get a storage collision or mismatch. This means a variable in the new implementation might read from or write to a different slot than intended, potentially corrupting data or enabling exploits.

How can this happen? If you insert a new state variable in the middle of the contract or change the type of an existing variable between upgrades, all subsequent variables shift their positions. For example, if V1 of a contract has uint256 a; bool b; and V2 accidentally inserts a new variable before a, or removes b, then the meanings of the slots get misaligned. Another source of collision is when the proxy contract itself declares a storage variable in a normal way (not in reserved slots). As noted earlier, EIP-1967 reserves three hash-derived slots for proxy metadata, making clashes with implementation storage or any other custom namespace practically impossible. If a developer ignores that and defines a state variable in the proxy, it can collide with the implementation’s slots.
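The slot shift described above can be checked mechanically. The following Python sketch assumes, for simplicity, that every state variable occupies its own slot in declaration order (real Solidity also packs small types together). The layout and incompatible_slots helpers and the fee variable are hypothetical.

```python
def layout(variables):
    """Assign slots in declaration order, one variable per slot (no packing)."""
    return {slot: var for slot, var in enumerate(variables)}


def incompatible_slots(old_vars, new_vars):
    """List slots whose (name, type) in the old layout differ in the new one.

    Variables appended after the old layout's last slot are not reported,
    because appending does not disturb existing slots.
    """
    old_layout, new_layout = layout(old_vars), layout(new_vars)
    return [
        (slot, old_var, new_layout.get(slot))
        for slot, old_var in old_layout.items()
        if new_layout.get(slot) != old_var
    ]


V1 = [("a", "uint256"), ("b", "bool")]
V2_BAD = [("fee", "uint256"), ("a", "uint256"), ("b", "bool")]   # inserted before a
V2_GOOD = [("a", "uint256"), ("b", "bool"), ("fee", "uint256")]  # appended at the end

bad_report = incompatible_slots(V1, V2_BAD)
good_report = incompatible_slots(V1, V2_GOOD)

# Values written under V1 stay where they are; V2_BAD simply reads them differently.
proxy_storage = {0: 1000, 1: True}          # a = 1000, b = True under V1
fee_read_by_bad_v2 = proxy_storage[0]       # V2_BAD thinks slot 0 is "fee"
```

Inserting fee first makes the new code interpret the old value of a as fee and the old value of b as a, while appending it leaves every existing slot untouched.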

Real-World Example, Audius Governance Hack (2022)

Audius, a decentralized music protocol, suffered a hack in July 2022 where an attacker stole funds from its community treasury. The root cause was traced to a storage collision introduced during an upgrade. Audius had an upgradeable governance contract (proxy + logic). In one upgrade, the developers added a new variable proxyAdmin to the proxy contract’s own storage, intending to store the admin address. However, they didn’t realize that this variable overlapped the implementation (logic) contract’s layout: the implementation had variables (including its initialization flags) in the same slot, which the proxy was now shadowing. Specifically, when the logic, running against the proxy’s storage, read its initialization flags (to check if the contract was already initialized), it instead got bytes of the new proxyAdmin address (because both lived at slot 0). Those non-zero bytes were misread in a way that let the initializer check pass, allowing the attacker to call the initialize() function again. By re-initializing the governance, the attacker assigned themselves as the governor. From there, it was straightforward: they used governance privileges to transfer out ~$6 million worth of tokens from the treasury to their own account. All of this happened because a seemingly innocuous change, adding a proxyAdmin variable, caused a catastrophic misalignment between proxy and implementation storage.
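To see how an address in slot 0 can defeat an initializer check, consider a byte-level model. This sketch makes three assumptions: that the logic uses an older OpenZeppelin-style Initializable, where two booleans (initialized, then initializing) are packed into the lowest two bytes of slot 0; that its guard passes when initializing is true; and that the proxy stores a 20-byte address in the low bytes of the same slot. The address values are made up for illustration.

```python
def read_flags(slot0: int):
    """Decode two packed booleans from the lowest two bytes of a storage word."""
    initialized = (slot0 & 0xFF) != 0           # byte 0: first declared bool
    initializing = ((slot0 >> 8) & 0xFF) != 0   # byte 1: second declared bool
    return initialized, initializing


def initializer_guard_passes(slot0: int, is_constructor: bool = False) -> bool:
    """Model of a guard of the form: initializing || isConstructor() || !initialized."""
    initialized, initializing = read_flags(slot0)
    return initializing or is_constructor or not initialized


FRESH = 0                      # nothing written yet: initialization allowed
PROPERLY_INITIALIZED = 0x01    # initialized = true, initializing = false

# Hypothetical admin addresses sharing slot 0 with the flags.
ADMIN_LOW_BYTES_NONZERO = 0x1111111111111111111111111111111111112233
ADMIN_SECOND_BYTE_ZERO = 0x1111111111111111111111111111111111110044

guard_results = {
    "fresh": initializer_guard_passes(FRESH),
    "initialized": initializer_guard_passes(PROPERLY_INITIALIZED),
    "admin_nonzero": initializer_guard_passes(ADMIN_LOW_BYTES_NONZERO),
    "admin_second_byte_zero": initializer_guard_passes(ADMIN_SECOND_BYTE_ZERO),
}
```

Under these assumptions the outcome depends on the exact bytes of the address: a non-zero second byte reads as "currently initializing" and reopens the initializer permanently, while a different address would have locked it instead. Either way the flag no longer means what the logic contract thinks it means.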

The Audius incident illustrates how dangerous storage collisions can be. Data that was meant to represent one thing (a flag) was interpreted as something else (an address), bypassing security checks. Collisions can also occur without involving the proxy’s own storage, for instance, if in an upgrade you forget to include an inherited base contract’s storage. Imagine V1 inherits A (with one variable) and has its own variables. If V2 removes the inheritance of A but doesn’t account for that slot, everything shifts up by one. Subtler yet, if you use multiple inheritance, the order in which base contracts are listed determines their linearization and therefore the storage ordering; changing that order can also reorder slots. Part 3 will discuss strategies like using storage “gaps” to mitigate these risks. But clearly, a storage layout mistake can be fatal. It might not be immediately obvious, but it can create a backdoor, as Audius learned.

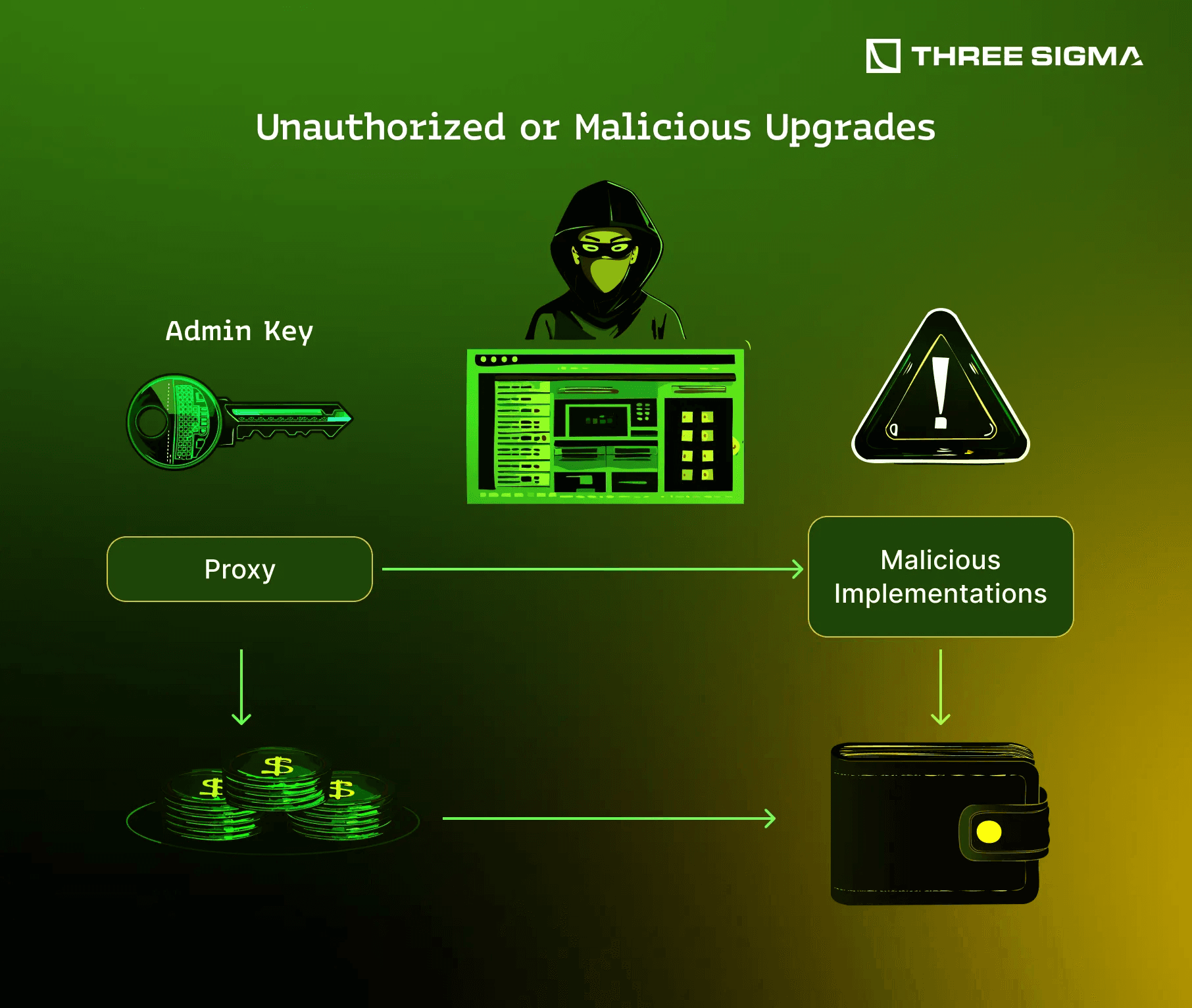

Unauthorized or Malicious Upgrades

The Vulnerability

Upgradeable contracts add an “escape hatch” to change the code, but that hatch must be secured. If the authority to upgrade (the proxy admin or the _authorizeUpgrade in UUPS implementations) falls into the wrong hands, an attacker can instantly compromise the entire system. By deploying their own malicious implementation and upgrading the proxy to point to it, they can make the contract do anything: drain funds, mint tokens, change balances, or simply sabotage the contract. This is not a theoretical risk: many exploits and even project rug-pulls have occurred via a compromised upgrade path. Mitigations like timelocks, multisig-controlled admins, and rigorous DAO audits are essential.

Common ways this vulnerability manifests:

- The private key controlling a proxy admin is hacked or phished.

- A bug in access control allows someone else to call the upgrade function (for instance, forgetting an onlyOwner on a UUPS upgradeTo).

- Intentional misuse by insiders: a developer with upgrade privileges might maliciously upgrade to a backdoored contract (insider hack or “rug pull”).
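The access-control failure in the list above comes down to a single missing check. The sketch below models a UUPS-style upgrade hook in Python. The class names, the upgrade_to and _authorize_upgrade methods, and the address strings are hypothetical stand-ins for the Solidity equivalents.

```python
class Unauthorized(Exception):
    pass


class UnguardedUpgradeable:
    """Model of an upgradeable contract whose authorization hook is empty."""

    def __init__(self, owner, implementation):
        self.owner = owner
        self.implementation = implementation

    def _authorize_upgrade(self, caller):
        # BUG: no check at all, so every caller is authorized.
        pass

    def upgrade_to(self, caller, new_implementation):
        self._authorize_upgrade(caller)
        self.implementation = new_implementation


class GuardedUpgradeable(UnguardedUpgradeable):
    """Same contract with an owner check in the authorization hook."""

    def _authorize_upgrade(self, caller):
        if caller != self.owner:
            raise Unauthorized("only the owner may upgrade")


unguarded = UnguardedUpgradeable(owner="team-multisig", implementation="logic-v1")
unguarded.upgrade_to("attacker", "malicious-logic")  # succeeds silently

guarded = GuardedUpgradeable(owner="team-multisig", implementation="logic-v1")
try:
    guarded.upgrade_to("attacker", "malicious-logic")
    attacker_blocked = False
except Unauthorized:
    attacker_blocked = True
guarded.upgrade_to("team-multisig", "logic-v2")  # legitimate upgrade still works
```

Note that the guarded version only moves the risk to whoever controls the owner address. If that key is a single EOA, stealing it has the same effect as the missing check.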

Real-World Example, PAID Network (2021)

PAID Network was exploited in March 2021 when an attacker obtained the private key for the proxy admin (reports suggest possibly through a leaked key or phishing). With control of the admin, the attacker upgraded the PAID token contract (an upgradeable proxy) to a new implementation that they had created. This malicious implementation included functions to mint a huge amount of PAID tokens to the attacker and burn tokens from others. Essentially, the attacker gave themselves a massive balance and invalidated others’ tokens, then sold the illicit tokens for profit. The code of the original PAID token had no flaws; the only flaw was that its upgrade key got compromised. Once that happened, the immutability was broken by design: the attacker could redefine what the contract does. PAID’s token lost most of its value and trust as a result. This underscores that upgradeable contracts are only as secure as the governance around upgrades. A perfectly secure logic contract means nothing if an attacker can replace it with different logic at will. Hardening key management and access control via an OpSec audit would directly address this class of failure.

There have been numerous other incidents:

- In 2022, an attacker targeting the Ankr protocol stole a deployer key and used it to upgrade the implementation of Ankr’s staking token contract, inserting a function to mint 6 quadrillion tokens to themselves (basically hyperinflation), netting around $5M from the liquidity. Again, the code was fine; the admin key loss was the fatal point.

- In April 2023, the SushiSwap router contract was saved from an exploit when white-hats noticed the deployer (which had upgrade abilities) was still an EOA key that could be targeted; they urged migration to a multi-sig promptly after a related incident.

- Rug pulls: Sadly, some project creators intentionally leave an upgrade path and then exploit it themselves. They’ll deploy an innocuous contract that passes audits, then later upgrade it to malicious code that steals user funds. This has happened in various yield farming scams. The presence of an upgrade function with a single key control is often a red flag when evaluating new projects (unless mitigated by timelocks or governance).

Dangerous Use of selfdestruct or Arbitrary delegatecall

The Vulnerability

Some low-level operations in Solidity are especially dangerous in an upgradeable context. Chief among them:

- selfdestruct (or SELFDESTRUCT opcode): If a logic contract self-destructs, it doesn’t directly kill the proxy (since the proxy is a separate contract). However, if the proxy tries to delegatecall to a destroyed implementation, it will find no code and the calls will fail. Essentially, selfdestruct in the implementation can “brick” the system by wiping out the code that the proxy points to. This could happen maliciously (as in the Wormhole scenario described, where the attacker upgraded to an implementation that self-destructs) or accidentally (a logic function that allows an admin to selfdestruct “when done” would be a design error in an upgradeable setting).

- Unrestricted delegatecall (or call) to external addresses: If the implementation contract has a function that performs a delegatecall to an address supplied by the caller, this is extremely dangerous. It effectively lets the caller execute code in the context of the proxy’s state, opening the door for all sorts of exploits. Similarly, an unrestricted address.call(bytes) that lets a user provide the data can be used to call arbitrary functions on other contracts, potentially with the proxy’s funds or authority. While not unique to upgradeable contracts, when such functions exist in an upgradeable logic, the attacker could even combine it with an upgrade attack (e.g. upgrade to logic that has a backdoor delegatecall).
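The danger of a caller-supplied delegatecall target, and the effect of restricting targets to a registry, can be sketched with the same kind of toy model. Everything here is hypothetical: the Router class, the execute_unsafe and execute_checked methods, and the two handler functions stand in for a contract, its entry points, and delegatecall targets.

```python
class Router:
    """Model of a contract that runs handler code against its own storage."""

    def __init__(self, allowed_handlers):
        self.storage = {"owner": "team"}
        self.allowed_handlers = set(allowed_handlers)

    def execute_unsafe(self, handler, *args):
        # Caller-supplied target: any code may run with this contract's storage.
        return handler(self.storage, *args)

    def execute_checked(self, handler, *args):
        # Only targets registered in advance may run with this contract's storage.
        if handler not in self.allowed_handlers:
            raise PermissionError("handler not registered")
        return handler(self.storage, *args)


def swap_handler(storage, amount):
    """A vetted handler that records the last swap amount."""
    storage["last_swap"] = amount


def malicious_handler(storage):
    """Attacker code: rewrites the caller's storage once it is executed."""
    storage["owner"] = "attacker"


open_router = Router(allowed_handlers=[swap_handler])
open_router.execute_unsafe(malicious_handler)  # owner is overwritten

checked_router = Router(allowed_handlers=[swap_handler])
checked_router.execute_checked(swap_handler, 42)
try:
    checked_router.execute_checked(malicious_handler)
    malicious_rejected = False
except PermissionError:
    malicious_rejected = True
```

An allowlist is only as strong as the process that populates it. If registration is itself unprotected, or a registered handler forwards to arbitrary code, the same hole reappears one level down.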

Real-World Example, Furucombo (2021)

Furucombo was a DeFi tool that allowed users to batch operations. It wasn’t an upgradeable proxy, but it had a mechanism where it would delegatecall into handlers for different protocols. The critical mistake was that it allowed an external address to be passed in as the delegatecall target, on the assumption that it was a valid handler. In February 2021, an attacker crafted a malicious contract that, when delegatecalled by Furucombo, ran with Furucombo’s own storage and identity, including the token approvals users had granted to it. The attacker used this to make Furucombo pull users’ tokens into the attacker’s address, stealing over $14M.

While Furucombo’s scenario wasn’t about upgradeability per se, it exemplifies why unchecked delegatecalls are lethal. In an upgradeable contract, one might be tempted to write something like a generic proxy within the logic (perhaps to forward calls to a “plugin”). But if that isn’t heavily restricted, it introduces the same risk Furucombo had. Regarding selfdestruct: the Wormhole case above shows how an attacker can weaponize it as part of an upgrade exploit. Another minor example: some developers of upgradeable contracts have considered using selfdestruct to remove old implementations (to save gas or avoid confusion). This is unnecessary and dangerous. Simply leave old implementations alone (and lock them against takeover by disabling their initializers).

In summary, dangerous opcodes in logic contracts should be avoided or heavily gated. selfdestruct should never be in an upgradeable contract’s business logic. Delegatecalls should only be used in controlled internal patterns, like the proxy itself delegating to an implementation or a diamond delegating to facets; those are deliberate and vetted uses. An open delegatecall is akin to handing attackers the key to your house. Part 3 will reiterate this: don’t try to get fancy with meta-programming in your implementations unless you absolutely know what you’re doing and have considered the security implications.

Conclusion

Many of these pitfalls boil down to human error and the complexity of upgrades. They underscore the need for robust processes and tooling. In the wild, several protocols have had close calls where an upgrade was performed incorrectly but luck or quick action prevented an exploit. That’s why you need a plan for rapid containment and recovery, including an established crypto emergency response partner.

In Part 3, we will convert these hard lessons into best practices, demonstrating how to properly write initializers, protect upgrade functions, use the tooling to catch storage issues, and implement secure governance. The goal is that by following those guidelines, your project won’t become the next case study in this list. Upgradeable contracts can be used safely, but as we’ve seen, mistakes can be dire. Proceed with caution, knowledge, and the right tools.

Security Researcher

Simeon is a blockchain security researcher with experience auditing smart contracts and evaluating complex protocol designs. He applies systematic research, precise vulnerability analysis, and deep domain knowledge to ensure robust and reliable codebases. His expertise in EVM ecosystems enhances the team’s technical capabilities.